In the universe of Business Intelligence modern world, handling large volumes of data efficiently is a constant challenge. The Microsoft Fabric emerges as a unified platform that simplifies data architecture, and its integration with the Apache Spark is at the heart of its large-scale data processing capability.

In this post, we will explore how this powerful combination works. We will start by understanding the role of Spark in distributed processing and its main advantages, and then we will show how easy it is to set up and optimize a Spark cluster within the Fabric environment.

1. Overview of Microsoft Fabric

Microsoft Fabric consolidates functionalities such as Data Warehousing, Data Engineering, real-time processing, Data Science, and Machine Learning into a single platform, making integrated information management easier.

In the realm of Data Engineering, the use of Spark is central. Spark is a distributed processing technology that executes tasks in parallel, optimizing performance in scenarios with large volumes of data. In Fabric, Spark comes pre-integrated—no additional installation is required—and clusters are automatically managed by the service, allowing dynamic scalability according to the workload.

2. How Does Spark Work?

Apache Spark is a distributed processing engine that operates through a master-worker architecture with inherent parallelism, allowing the processing of large volumes of data across multiple machines in a coordinated manner.

2.1. Parallelism Architecture in Spark

Spark operates on a hierarchical architecture composed of two main types of nodes: the master node (also referred to as driver) and the worker nodes (which execute executors). This distribution allows Spark to break down complex tasks into smaller operations that are executed in parallel.

The driver node acts as the central coordinator of the cluster, being responsible for:

- Analyzing, distributing, and scheduling tasks among the executors

- Maintaining the SparkContext, which represents the connection to the Spark cluster

- Monitoring execution progress and ensuring fault tolerance

The worker nodes contain the executors, which are processes responsible for the actual execution of tasks. Each executor has two main responsibilities:

- Executing the code assigned by the driver/worker node

- Reporting the progress of computations back to the driver node

2.2. How Parallelism Works

Parallelism in Spark is achieved by dividing the data into partitions distributed across the different nodes of the cluster. Each partition is processed independently by different threads, allowing simultaneous operations. For example, if a dataset is divided into multiple 128MB partitions, different executors can process these partitions in parallel, maximizing the use of computational resources.

Spark creates a DAG (Directed Acyclic Graph) to schedule tasks and orchestrate the worker nodes in the cluster. This mechanism allows for optimizing the sequence of operations and facilitates recovery in case of failures by replicating only the necessary operations on the data from a previous state.

3. Spark Configurations in Microsoft Fabric

3.1. Prerequisites

- Access to the Microsoft Fabric portal with the necessary permissions (admin, contributor, or member)

- Previously purchased Fabric SKU OR an active Fabric Trial

3.2. Configuration Steps

Pre-warmed Cluster Configuration

Microsoft Fabric offers Starter Pools that use clusters pre-warmed running on Microsoft virtual machines to significantly reduce startup times. These clusters are always active and ready for use, providing Spark session initialization typically within 5 to 10 seconds, without the need for manual configuration.

Starter Pools use medium-sized nodes that scale dynamically based on the needs of Spark jobs. When there are no dependencies on custom libraries or custom Spark properties, sessions start almost instantly because the cluster is already running and requires no provisioning time.

However, there are scenarios where the startup time may be longer:

- Custom libraries: Adds 30 seconds to 5 minutes for session customization

- High regional usage: When Starter Pools are saturated, it may take 2–5 minutes to create new clusters

- Network options: Private Links or Managed VNets disable Starter Pools, forcing on-demand creation

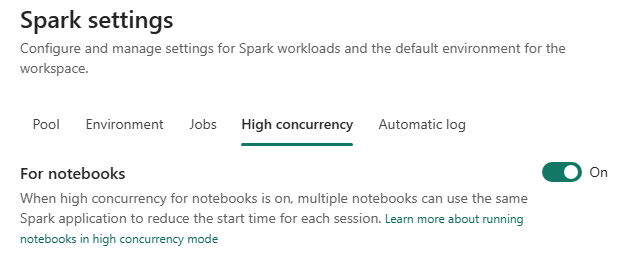

High Concurrency Mode Activation

It is recommended to enable the High Concurrency Mode to allow multiple notebooks to share the same Spark session, optimizing resource usage and drastically reducing startup times. In custom high-concurrency pools, users experience significantly faster session startup compared to standard Spark sessions.

To enable High Concurrency Mode:

- Access the Workspace Settings

- Navigate to Data Engineering/Science > Spark Settings > High Concurrency

- Enable the option For notebooks

Recommended Specifications for Pools

To illustrate the ideal configurations, let's consider an example with SKU F64:

Base Capacity:

- F64 = 64 Capacity Units = 128 Spark VCores

- With a burst factor of 3x = 384 maximum Spark VCores (the burst factor multiplies the available processing capacity to enhance performance)

Recommended Configuration for Custom Pool:

| Parameter | Valor Recomendado | Explanation |

|---|---|---|

| Node Family | Memory Optimized | Suitable for data processing workloads |

| Node Size | Medium (8 VCores) | Balance between performance and concurrency |

| Autoscale | Enabled (min: 2, max: 48) | 48 nodes × 8 VCores = 384 VCores (maximum burst) |

| Dynamic Allocation | Enabled | Allows automatic adjustment of executors based on demand |

Dynamic Allocation Configuration:

- Min Executors: 2 (baseline for immediate availability)

- Max Executors: 46 (reserving 2 nodes for driver and overhead)

- Initial Executors: 4 (balance between startup time and resource waste)

Scaling Based on SKU:

For different SKUs, the maximum configurations vary:

| SKU | Capacity Units | Max Spark VCores | Recommended Node Size | Max Nodes |

|---|---|---|---|---|

| F2 | 2 | 12 | Small | 3 |

| F8 | 8 | 48 | Medium | 6 |

| F16 | 16 | 96 | Medium | 12 |

| F64 | 64 | 384 | Medium/Large | 48/24 |

| F128 | 128 | 768 | Large | 48 |

Configuration Through the Portal

In the Microsoft Fabric portal:

- Go to the section Data Engineering > Spark Settings

- Select New Pool to create a custom cluster

- Set the Node Family and Node Size according to the requirements

- Configure Autoscale with a minimum number of nodes = 1 (Fabric ensures recoverable availability even with a single node)

- Enable Dynamic Executor Allocation for automatic resource optimization

Integration with Data Sources:

- Use native connectors to establish connections to Data Lakes or Data Warehouses

- Ensure that credentials and security settings are correctly configured

Notebook and Task Configuration:

- Configure notebooks (Python or Scala) for developing transformation scripts

- Schedule batch tasks or configure streaming processes according to requirements

Custom pools have a autopause default of 2 minutes, after which sessions expire and clusters are deallocated, with charges applied only for active usage time.

Of course, the ideal cluster settings may vary depending on the processes to be executed. An evaluation accompanied by tests should always be conducted for each case.

4. Best Practices and Technical Considerations

Sizing and Optimization

Properly sizing the cluster is essential. Consider:

Burst Factor: Determine the required instant scalability capacity to handle processing peaks. The logic should include multiplying the number of VCores used in the selection to achieve the required burst factor. For example, for an F64 SKU (128 base VCores), configure the pool up to 384 VCores by adjusting the number of nodes and node size (e.g., Medium nodes (8 VCores each) × 48 nodes = 384 VCores).

Number of Cores and Memory: For the driver node, select an appropriate number of cores and memory, as it orchestrates the processing and must support cluster management tasks. For worker nodes, the choice should be based on multiplying the resources (cores and memory) needed for parallel processing. Consider the possibility of scaling these nodes to adjust performance according to the workload.

Automation and Scheduling

Automate recurring processes through scripts and scheduling, ensuring consistency and minimizing errors.

Monitoring

Use Fabric’s native monitoring tools to identify potential issues and adjust cluster performance in real time.

Security

Ensure the implementation of robust security policies by configuring permissions and using secure connections for data access.

What are the next steps?

B2F has solid experience in developing solutions on Microsoft Fabric, as well as implementing Spark-based processes. If you need expert support to maximize the performance and efficiency of your analytics platform, our team is ready to collaborate with you and find the best solutions for your challenges.